I wanted to do a real write-up on this, but it’s a lot of work and I’m swamped these days. I still want to share it with the world, so we’re going to cover the general architecture, because that’s all I have the energy for right now. I didn’t have time to clean the code up.

To start, a brief (1:34) video intro if you’re interested:

—

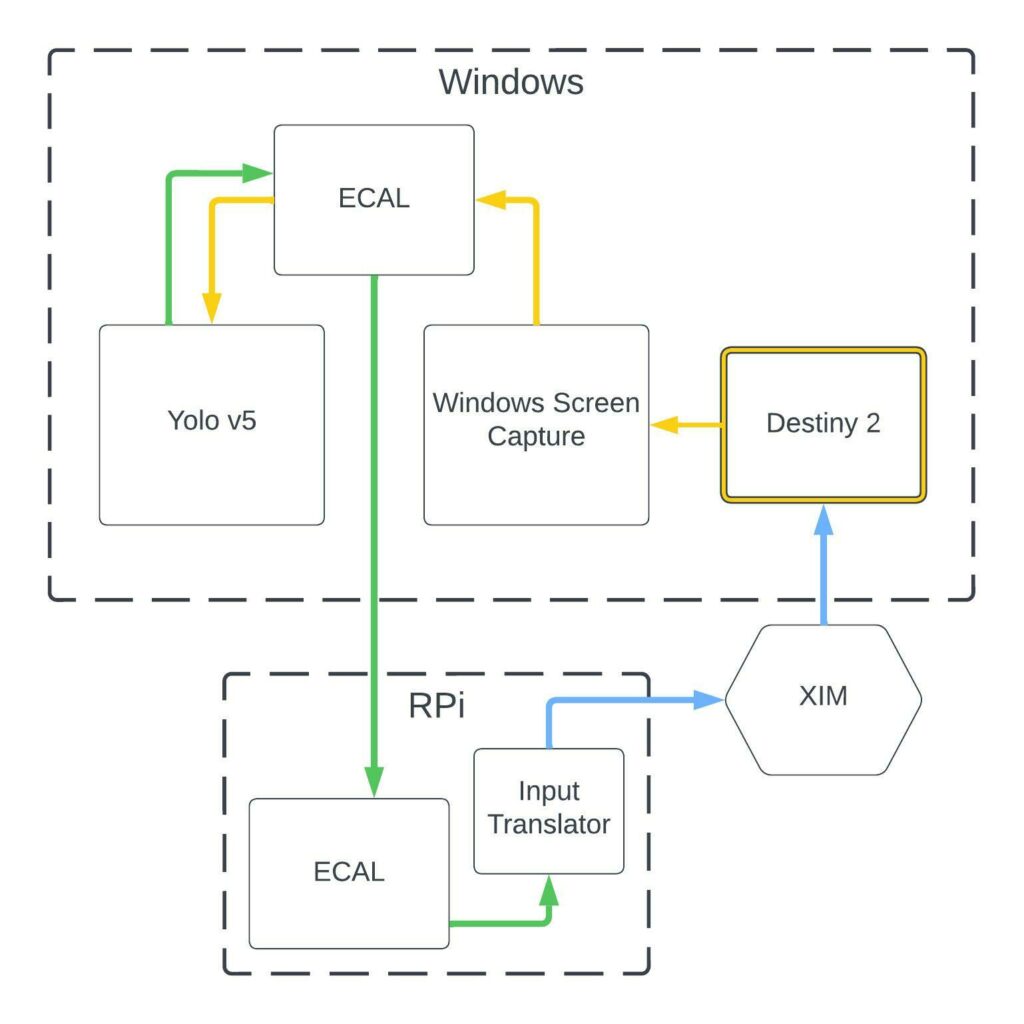

Architecture:

Acknowledgments:

OpenPose implementation for C++:

spacewalk01/tensorrt-openpose: TensorRT C++ Implementation of openpose (github.com)

YOLOv5 training and toolchain:

ultralytics/yolov5: YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite (github.com)

YOLOv5 implementation for C++:

shouxieai/tensorRT_Pro: C++ library based on tensorrt integration (github.com)

Screen Capture:

My Code:

The code and license here are based on the NVIDIA desktop duplication sample. That was the original screen capture base, and it’s no longer in use. It’s hot garbage, but you can watch the pile grow in the commits if you want:

rlewkowicz/screencap-to-cvmat (github.com)

The screen cap:

rlewkowicz/Win32CaptureSample: A simple sample using the Windows.Graphics.Capture APIs in a Win32 application. (github.com)

The translator:

TL;DR: I never committed this code. I still could, there’s just a lot to tidy.

So the Pi is probably one of the coolest parts of this. You can write your own device descriptors and make the Pi a USB device. I had to hardwire the GPIO pins for power and then use the USB-C port as the hardware device. It’s so cool. I need to write about this if I can find the time.
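Since the translator code isn’t published, here’s a rough sketch of what “writing your own device descriptor” can look like. It is not the actual code. I’m assuming the Pi presents itself as a plain 3-button relative HID mouse, which the post above doesn’t confirm. The descriptor bytes are the standard boot-protocol mouse layout from the USB HID spec. `kMouseReportDescriptor`, `MouseReport`, and `packMouseReport` are names made up for this example.

```cpp
#include <algorithm>
#include <array>
#include <cstdint>

// Standard 3-button relative mouse report descriptor (HID boot-protocol layout).
// Assumption: the device class is a mouse; the original project may differ.
constexpr std::array<std::uint8_t, 50> kMouseReportDescriptor = {
    0x05, 0x01,  // Usage Page (Generic Desktop)
    0x09, 0x02,  // Usage (Mouse)
    0xA1, 0x01,  // Collection (Application)
    0x09, 0x01,  //   Usage (Pointer)
    0xA1, 0x00,  //   Collection (Physical)
    0x05, 0x09,  //     Usage Page (Buttons)
    0x19, 0x01,  //     Usage Minimum (1)
    0x29, 0x03,  //     Usage Maximum (3)
    0x15, 0x00,  //     Logical Minimum (0)
    0x25, 0x01,  //     Logical Maximum (1)
    0x95, 0x03,  //     Report Count (3)
    0x75, 0x01,  //     Report Size (1)
    0x81, 0x02,  //     Input (Data, Variable, Absolute): 3 button bits
    0x95, 0x01,  //     Report Count (1)
    0x75, 0x05,  //     Report Size (5)
    0x81, 0x01,  //     Input (Constant): 5 padding bits
    0x05, 0x01,  //     Usage Page (Generic Desktop)
    0x09, 0x30,  //     Usage (X)
    0x09, 0x31,  //     Usage (Y)
    0x15, 0x81,  //     Logical Minimum (-127)
    0x25, 0x7F,  //     Logical Maximum (127)
    0x75, 0x08,  //     Report Size (8)
    0x95, 0x02,  //     Report Count (2)
    0x81, 0x06,  //     Input (Data, Variable, Relative): dx, dy
    0xC0,        //   End Collection
    0xC0         // End Collection
};

// One input report matching the descriptor above: [buttons, dx, dy].
using MouseReport = std::array<std::uint8_t, 3>;

// Hypothetical helper: clamp the movement to the descriptor's logical range
// (-127..127) and pack it into the 3-byte report the host expects.
inline MouseReport packMouseReport(bool left, bool right, bool middle,
                                   int dx, int dy) {
    const auto clampAxis = [](int v) {
        const int clamped = std::min(127, std::max(-127, v));
        // Two's-complement byte, e.g. -3 becomes 0xFD.
        return static_cast<std::uint8_t>(static_cast<std::int8_t>(clamped));
    };
    std::uint8_t buttons = 0;
    if (left)   buttons |= 0x01;  // bit 0: button 1
    if (right)  buttons |= 0x02;  // bit 1: button 2
    if (middle) buttons |= 0x04;  // bit 2: button 3
    return MouseReport{buttons, clampAxis(dx), clampAxis(dy)};
}
```

With the Linux USB gadget HID function, bytes like these go into the function’s `report_desc` file under configfs, and each packed report is written to a device node such as `/dev/hidg0`. Whether the original setup works exactly that way is a guess on my part.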